The following documents will be updated and/or migrated to recent documentation.

Two algorithms

Two algorithms for the instantiation of structures of musical objects

Bernard Bel

This is an extended and revised version of the chapter: Symbolic and Sonic Representations of Sound-Object Structures published in M. Balaban, K. Ebcioglu & O. Laske (Eds.) “Understanding Music with AI: Perspectives on Music Cognition”, AAAI Press (1992, p. 64-109).

Abstract

A representational model of discrete structures of musical objects at the symbolic and sonological levels is introduced. This model is being used to design computer tools for rule-based musical composition, where the low-level musical objects are not notes, but “sound-objects”, i.e. arbitrary sequences of messages sent to a real-time digital sound processor.

“Polymetric expressions” are string representations of concurrent processes that can be easily handled by formal grammars. These expressions may not contain all the information needed to synchronise the whole structure of sound-objects, i.e. to determine their strict order in (symbolic) time. In response to this, the notion of “symbolic tempo” is introduced: the ordering of all objects in a structure is possible once their symbolic tempos are known. Rules for assigning symbolic tempos to objects are therefore proposed. These form the basis of an algorithm for interpreting incomplete polymetric expressions. The relevant features of this interpretation are commented.

An example is given to illustrate the advantage of using (incomplete) polymetric representations instead of conventional music notation or event tables when the complete description of the musical piece and/or its variants requires difficult calculations of durations.

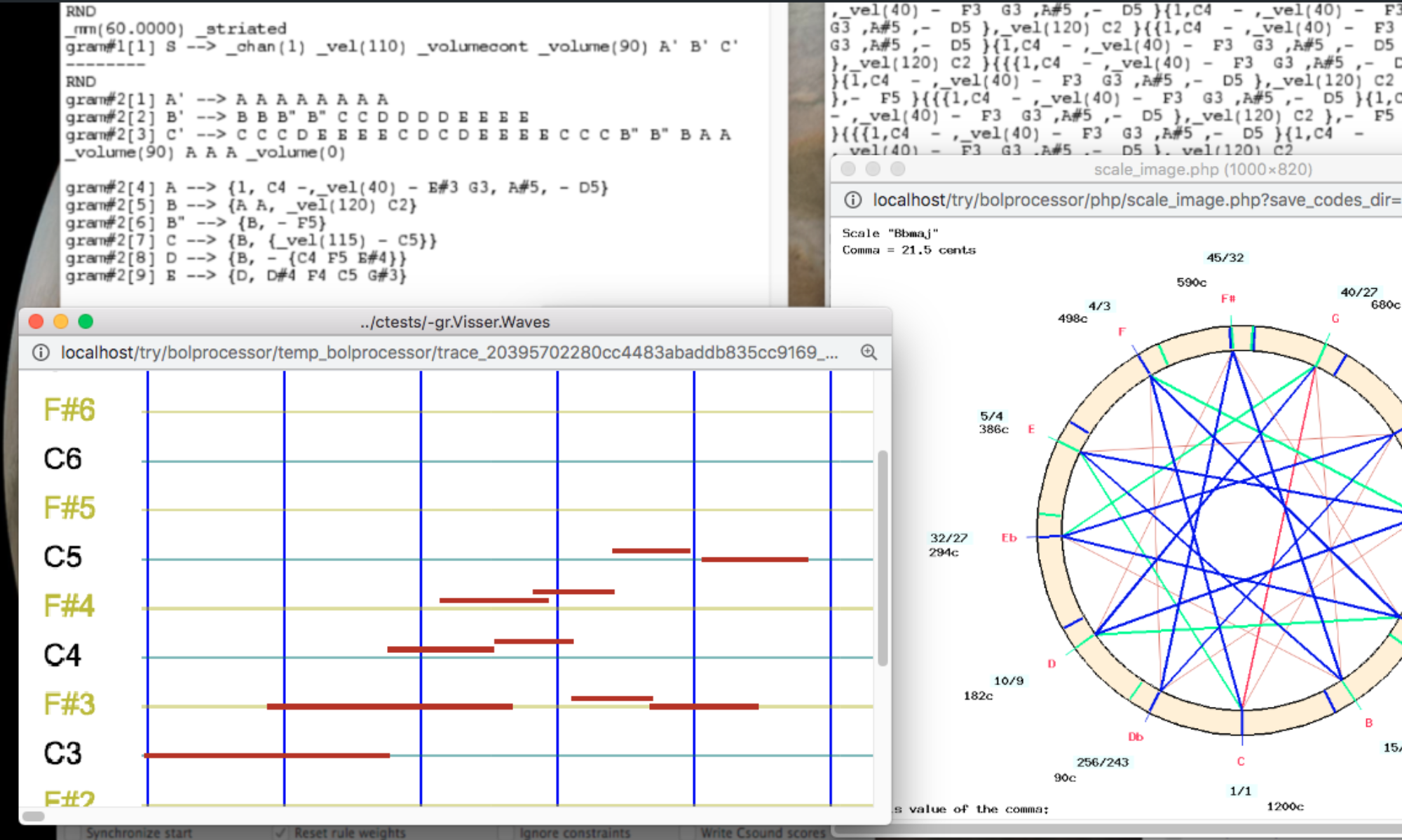

Given a strict ordering of sound-objects, summarised in a "phase table" representing the complete polymetric expression, the next step is to compute the times at which messages should be sent. This requires a description of "sound-object prototypes" with their metric/topological properties and various parameters related to musical performance (e.g. "smooth" or "striated" time, tempo, etc.). These properties are discussed in detail, and a time-polynomial constraint satisfaction algorithm for the time-setting of sound objects in a polymetric structure is introduced. Typical examples computed by this algorithm are shown and discussed.

Excerpts of an AI review of this paper (Academia, June 2025)

Summary of the Work

The manuscript introduces a model for representing and instantiating musical structures made of discrete sound-objects. It explores separate representations for symbolic and physical time, then proposes methods to synchronize concurrent musical processes and to compute the precise scheduling of messages (or events) destined for a sound processor. The author conceptualizes “smooth” and “striated” time, describes “polymetric expressions” as high-level objects that can be incomplete, and provides algorithms to infer missing timing details. The paper merges formal language approaches, real-time considerations, and practical examples of usage in a software environment called Bol Processor.

Comprehensive Model of Time

- The clear distinction between symbolic time (strictly ordered events) and physical time (metronomic or arbitrary “striated” structures) provides an insightful way to handle simultaneous or overlapping musical events.

- The notion of “smooth” versus “striated” time is conjugated with helpful examples, making it straightforward to envision both contexts of performance.

Polymetric Expressions and Incomplete Descriptions

- The approach to polymetric expressions accommodates incomplete representations that can later be resolved by an algorithmic interpretation. This is particularly valuable for composers and researchers looking to experiment with generative or rule-based systems without being forced into fully specified notation from the outset.

- The examples and diagrams showing how one can map polymetric structures to a phase diagram illustrate the concept effectively.

Synchronization and Constraints

- The proposed synchronization algorithm handles concurrency by carefully propagating symbolic tempos across sequences. This helps manage cases where direct numeric durations are not known beforehand.

- The introduction of properties such as pivot alignment (PivBeg, PivCent, etc.), truncation, elasticity, and relocatability demonstrates thorough consideration of realistic performance scenarios (e.g., rubato, partial overlaps). The concept of locating a sound object by its pivot was inspired by Marco Stroppa's work at IRCAM in 1990.

Practical Implementation and Examples

- The paper gives a thorough demonstration of how these algorithms integrate with a functional software environment, including tangible data structures (phase tables, instance tables) and step-by-step flowcharts.

- Musical examples and references to real-time computer music systems underscore the applicability of the approach.

Rich Technical Detail

- The stepwise pseudo-code for the time-setting algorithm is extensive and transparent. This level of detail can guide further experimental or production-level implementations.

- The complexity analysis (O(nmax² · imax³) in the worst case) provides an understanding of potential computational limits, helpful for anyone planning to use or extend these algorithms.

Potential Impact

- This work is useful to composers, researchers in algorithmic composition, and developers of music software who need robust synchronization methods that go beyond simple “note-by-note” scheduling.

- The proposed methods can enable more flexible representations of time and concurrency, allowing for richer generative or improvisational projects.

Summary of Review

Overall, this paper provides a detailed, formalized framework for resolving concurrent musical structures. It bridges the gap between abstract symbolic grammars and practical real-time performance considerations. Researchers and musicians interested in algorithmic composing or advanced computer-assisted composition tools will likely find its approach compelling, especially given the extensive examples and robust pseudo-code.

Download this paper (PDF)

Skip to PDF contentBol Processor, an overview

This PowerPoint slideshow is a detailed presentation, along with sound examples, of Bol Processor in its BP2 version.

➡ Download the slideshow (Zip file, 150 Mb)

Presentations

Cambridge poster

Is text an adequate tool for modelling musical analysis, composition and performance?

This poster was presented at the conference Language and Music as Cognitive Systems in Cambridge (UK), on 11-13 May 2007.

➡ Download the poster (20 Mb PDF)

Shapes in Rhythm

This composition was part of the choreographic work CRONOS created by Andréine Bel and performed at the National Centre for the Performing Arts (Mumbai, India) and the Shri Ram Center (Delhi) in October 1994.

The following grammar "-gr.ShapesInRhythm" was written (in about 2 days) by Andréine and Bernard Bel.

There were six dancers on the stage: Smriti Mishra, Olivier Rivoirard, Vijayshree Chaudhary, Arindam Dasgupta, Somenath Chatterjee and Suresh Shetty.

The musical structure consists of 9 parts using very different sound patches played on Roland D-50 synthesiser with a Musitronics extension card. Each part is based on the rhythmic structure of a tihai: three equal repetitions of a rhythmic pattern, interspersed with two equal rests, with the constraint that the last unit must fall on the first beat of the rhythmic cycle. The cycle has 16 beats, or tintal in North-Indian music/dance. Tihais are basic figures of Kathak dance and tabla drumming.

On an old Mac IIci, this would take 14 minutes to produce and time! For this reason, subgrammar instructions have been optimised: instead of the standard "RND" mode, "ORD" has been used wherever possible, otherwise "SUB1", whose process is a unique "parallel" rewrite of the work string.

Playing the piece required a 30-millisecond quantization setting which reduced the size of the phase table by a factor of 222. See Complex ratios in polymetric expressions for a detailed explanation.

At the time this grammar was written, BP2 did not support articulation or glossaries. This grammar has highlighted the need for such features.

Smooth time and time patterns (with time-objects t1, t2 and t3) were used because the dancers expected the first sections to start slowly and speed up. Thus, the composition starts at metronome 60, continues at metronome 80 and ends at 88. In this composition, however, striated time would be a much better option because speed changes can be managed using the "_tempo()" tool: forget time patterns, set the metronome to 88 and insert _tempo(60/88) then _tempo(80/88) and finally _tempo(1) to change speeds. This work was an incentive to implement the "_tempo()" performance tool…

Click this link to display the score of this piece.➡ Video at the bottom of this page.

An extract of this work is shown in the following video from 3mn 36s to 3mn 48s:

References

Related work

Reference manual (BP2.9.8)

The complete reference manual for version 2 of the Bol Processor

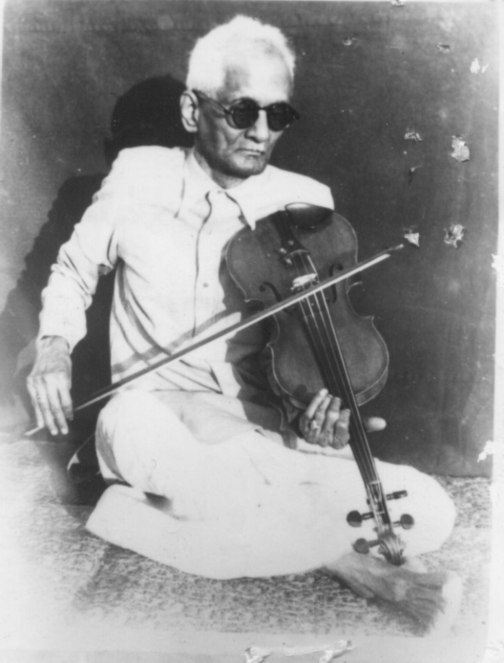

Computing ‘ideas’

A composition in Carnatic musical style by Srikumar K. Subramanian, June 1995.

Name: "-gr.trial.mohanam"

This is a non-stop improvisation of variations in a style similar to Carnatic music. The compositional approach here is to decide that each variation should contain 32 notes and can use up to 20 "ideas". To do this, a flag called Ideas is set to 20 at the beginning, and it is decreased by 1 unit in certain rules (such as GRAM#2[2]) or 2 units in others (such as GRAM#2[3]). See the page Flags in grammars for more details.

Rules in subgrammar #3 can only be candidates if there are few ideas left, but they do not reduce ideas.

Rules in subgrammar #6 use wildcards to create patterns.

Rules in subgrammar #9 create "effects" by changing velocities.

In the ‘Improvize’ mode, the values of flags and rule weights can be carried over from one variation to the next. This allows them to be used to trigger/inhibit events at any distance from those that created/modified them.

The following output was recorded on a Roland D-50 synthesiser.

Undetermined rests in dance performance

The polyrhythmic piece "765432" composed by Andréine Bel for her CRONOS dance production (1994) illustrates the use of undetermined rests. Six dancers were on stage: Suresh Shetty, Smriti Mishra, Olivier Rivoirard, Vijayshree Chaudhary, Arindam Dasgupta and Andréine Bel.

In this grammar, "SUB1" indicates a substitution that only needs to be performed once. Using it to replace "SUB" saves computation time.

Glyphs "…" are undetermined rests, i.e. silences whose duration is a priori unknown and will be precisely calculated by the polymetric expansion algorithm.

The whole structure is based on regular arithmetic divisions. For example, Suresh moves at "speed 7", Smriti at "speed 6" and Olivier at "speed 5".

"CR47" and "C46" are patches from the Roland D-50 synthesiser.

The following output was recorded on a Roland D-50 synthesiser.

This performance was part of the choreographic work CRONOS performed at the National Centre for the Performing Arts (Mumbai, India) and the Shri Ram Center (Delhi) in October 1994. An excerpt is shown from 4mn 50s to 5mn 10s: