The aim of the current project is to transcribe a musical input (given as a stream or a table of MIDI events) into the most comprehensible polymetric notation. A natural extension would be to accept a sound signal as input.

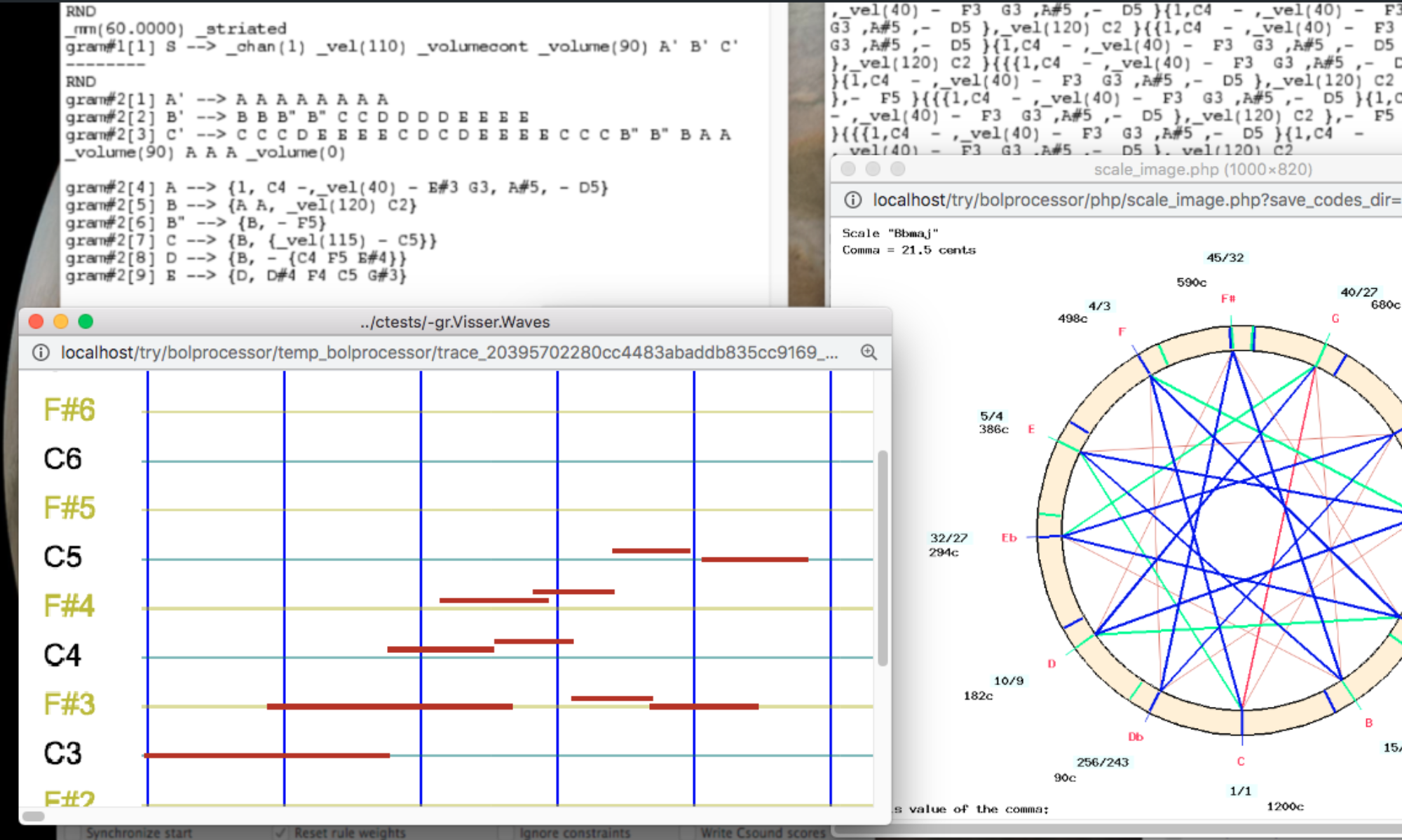

Polymetric notation is one of the main features of the Bol Processor project, which is here considered as musicological research rather than software development. Other features include tonal modelling and the time-setting of sound-objects.

Polymetric expressions are a convenient product of rule-based music composition. They are represented as strings of symbols that embody a semi-lattice structure, here meaning a tree of sound objects with ties. Polymetric notation, generally speaking, is a sequence of polymetric expressions that is processed as a single polymetric expression.

To achieve this transcription, we plan to train a transformer (a type of neural network) on sets of polymetric expressions paired with their renderings, both as standard MIDI files and as tables of events. Because this requires large datasets covering a wide range of musical styles, we plan to create these sets from existing musical scores.

The process described on the Importing MusicXML scores page demonstrates the ability to "translate" any Western musical score (in its digital format) to a polymetric expression. This process is irreversible because polymetric expressions cannot be fully converted into human-readable musical scores, even though they carry time structures that sound "musical". A typical example is the undetermined rest, whose exact duration is only set by further processing of the structure.

Datasets that associate polymetric expressions with their MIDI file renderings contain identical pitch and timing information on both sides. Since both descriptions are complete and not redundant, the matching is a game of full information.

At a later stage, the transformer should also be able to handle streams of MIDI events created by humans or random effects, where the timings are not based on a simple framework. The stream must therefore be quantized before it is analysed. This quantization is already operational in the Bol Processor (see the Capture MIDI input page).
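To illustrate what quantization means here, the following minimal Python sketch snaps event times to the nearest multiple of a grid step. The function name `quantize_times` and the 50 ms grid are assumptions chosen for the example; this is not the algorithm used by the Bol Processor's MIDI capture.

```python
def quantize_times(times_ms, grid_ms=50):
    """Snap each time (in milliseconds) to the nearest multiple of grid_ms."""
    if grid_ms <= 0:
        raise ValueError("grid_ms must be positive")
    # Integer arithmetic rounds halves up, unlike round(), which rounds
    # halves to the nearest even number.
    return [((t + grid_ms // 2) // grid_ms) * grid_ms for t in times_ms]


# Hypothetical timings of a slightly irregular human performance.
played = [0, 498, 1003, 1165, 1666]
print(quantize_times(played))  # [0, 500, 1000, 1150, 1650]
```

A coarser grid removes more performance jitter but also erases short rhythmic values, so the step would need to be chosen with the musical material in mind.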

The creation of datasets

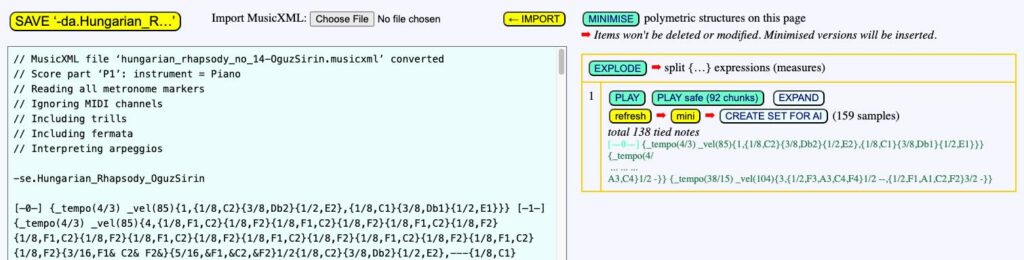

Datasets are created from musical works imported from MusicXML scores. For instance:

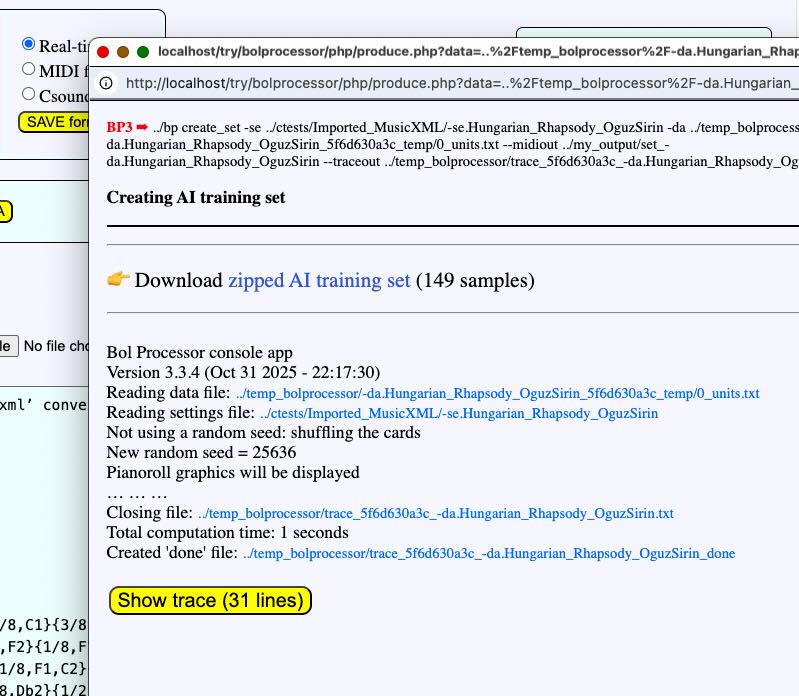

Click the CREATE DATASET FOR AI button.

A dataset is created and can be downloaded in a zip file:

set_-da.Hungarian_Rhapsody_OguzSirin.zip.

More sets can be created using the same musical work. Clicking the CREATE DATASET FOR AI button again would produce the same set, as it is built from a sequence of random numbers that is not reinitialised. To ensure the new set is different, click the refresh button. When downloading it, the system will automatically assign it a different name, e.g.:

set_-da.Hungarian_Rhapsody_OguzSirin (1).zip.

Datasets of minimised polymetric structures

The mini button close to the refresh button modifies the dataset so that all (eligible) polymetric structures are minimised. In a minimised structure, some rests with explicit durations are replaced with undetermined rests (notated '…') without any loss of timing accuracy. Read the Minimising a polymetric structure page for more details. These sets are smaller in size than the ones they are derived from because only eligible structures have been retained.

Once samples in the training set have been minimised, the CREATE DATASET FOR AI button is changed to CREATE minimised DATASET FOR AI.

The sets of minimised structures are downloaded with specific names that mention the 'mini' feature, such as:

set_-da.Hungarian_Rhapsody_OguzSirin_mini.zip

When training an AI, these sets should be used separately from standard sets because they are expected to train the transformer to guess the proper locations of undetermined rests. Nevertheless, it could be interesting to compare related (standard versus minimised) samples in order to model changes between standard and minimised polymetric structures.

The content of a dataset

The first dataset created in this demo contains 160 samples. Each sample consists of a text file (1.txt, 2.txt, etc.), the associated MIDI file (1.mid, 2.mid, etc.), and two tables of events (see below): 1.tab, 2.tab, etc., and 1.tsv, 2.tsv, etc. A text file whose name ends in "_units.txt" contains all the sample text files, enabling these samples to be copied in a single piece to a data project. Clicking on the word "samples" displays it in a pop-up window.
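Before training, it may be useful to check that every sample in a downloaded archive has its four files. The sketch below groups file names by sample number; the helper names `group_samples` and `complete_samples`, and the file names in the demo list, are made up for the example.

```python
from collections import defaultdict
from pathlib import PurePosixPath

SAMPLE_EXTENSIONS = {".txt", ".mid", ".tab", ".tsv"}


def group_samples(names):
    """Group file names by sample number: {1: {".txt": "1.txt", ...}, ...}."""
    samples = defaultdict(dict)
    for name in names:
        path = PurePosixPath(name)
        # Keep only "<number>.<ext>" files; this skips the "_units.txt" summary.
        if path.suffix in SAMPLE_EXTENSIONS and path.stem.isdecimal():
            samples[int(path.stem)][path.suffix] = name
    return dict(samples)


def complete_samples(samples):
    """Return the sorted numbers of the samples that have all four files."""
    return sorted(n for n, files in samples.items()
                  if set(files) == SAMPLE_EXTENSIONS)


# Hypothetical archive listing: sample 2 lacks its tables of events.
names = ["set/1.txt", "set/1.mid", "set/1.tab", "set/1.tsv",
         "set/2.txt", "set/2.mid", "set/demo_units.txt"]
print(complete_samples(group_samples(names)))  # [1]
```

With a real archive, the list of names could come from `zipfile.ZipFile(path).namelist()` in the Python standard library.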

One of the text files contains for instance:

{_tempo(4/3) _vel(85){2,{3/16,D6& E6& D7&}{3/16,&D6,&E6,&D7}{1/8,C6,C7}{3/8,B5,B6}{1/8,A5,A6}{3/8,G#5,G#6}{1/8,A5,A6}{3/8,B5,B6}{1/8,G#5,G#6},{3/8,G#3}{1/8,D4}{3/8,B4}{1/8,E4}{3/8,E3}{1/8,B3}{3/8,G#4}{1/8,E4}}} {_tempo(4/3) _vel(85){2,{3/8,A5,A6}{1/8,G#5,G#6}{3/8,A5,A6}{1/8,B5,B6}{3/8,C6,C7}{1/8,B5,B6}{3/8,C6,C7}{1/8,D6,D7},{3/8,A3}{1/8,D4}{3/8,C5}{1/8,A4}{3/8,E3}{1/8,C4}{3/8,A4}{1/8,E4}}}

This polymetric expression covers 2 measures of the musical score. Clicking the Refresh button (or reloading the page) slices the score randomly into chunks containing between 1 and 5 measures. The upper limit of 5 has been set arbitrarily and may be revised at a later date. Every time the Refresh button is clicked, a new slice is created, resulting in a different dataset.
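The random slicing can be pictured with the short Python sketch below, which cuts a score of `n_measures` measures into consecutive chunks of 1 to 5 measures. It only illustrates the idea: the function name, the `seed` parameter and the uniform choice of chunk lengths are assumptions, not the Bol Processor's actual procedure.

```python
import random


def slice_measures(n_measures, max_len=5, seed=None):
    """Split measures 0..n_measures-1 into consecutive chunks of 1..max_len."""
    rng = random.Random(seed)
    chunks, start = [], 0
    while start < n_measures:
        # The last chunk is shortened so that it never runs past the score.
        length = rng.randint(1, min(max_len, n_measures - start))
        chunks.append(range(start, start + length))
        start += length
    return chunks


# A hypothetical 23-measure score; each chunk would become one sample.
for chunk in slice_measures(23, seed=1):
    print(list(chunk))
```

Calling the function again with the same seed reproduces the same slicing, while a different seed (or none) yields a different set of samples.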

The idea is twofold: (1) the transformer should be trained to recognise sequences of polymetric expressions, and (2) tied notes may span more than one measure. A tied note in the above example is the pair "D6& &D6". Each sample contains only complete pairs of tied notes: any note whose tie partner is absent from the sample is untied for the sake of consistency.
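The untying of incomplete pairs can be sketched in Python as follows. This is a simplified illustration under stated assumptions: notes are recognised with a regular expression for English note names (such as D6, G#5 or Ab4), ties are matched in textual order rather than by following the time structure of the polymetric expression, and the function name `untie_incomplete` is hypothetical.

```python
import re

# Optional leading "&", a note name such as D6, G#5 or Ab4, optional trailing "&".
NOTE = re.compile(r"(&?)([A-G][#b]?\d+)(&?)")


def untie_incomplete(expression):
    """Remove the '&' of every tie whose partner is missing from the text."""
    matches = list(NOTE.finditer(expression))
    keep_left = [False] * len(matches)
    keep_right = [False] * len(matches)
    open_ties = {}  # note name -> index of the token waiting for its partner
    for i, m in enumerate(matches):
        lead, name, trail = m.groups()
        if lead and name in open_ties:
            j = open_ties.pop(name)
            keep_right[j] = keep_left[i] = True  # a complete pair
        if trail:
            open_ties[name] = i
    out, pos = [], 0
    for i, m in enumerate(matches):
        out.append(expression[pos:m.start()])
        out.append(("&" if keep_left[i] else "") + m.group(2)
                   + ("&" if keep_right[i] else ""))
        pos = m.end()
    out.append(expression[pos:])
    return "".join(out)


print(untie_incomplete("{2, &C5 D5& &D5 C5&}"))  # {2, C5 D5& &D5 C5}
```

In this example the pair "D5& &D5" is complete and is kept, whereas "&C5" and "C5&" lose their ties because their partners lie outside the fragment.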

Note that the timings of the polymetric expression and the MIDI file are identical, as the metronome is automatically set to a default tempo of 60 beats per minute when the MIDI file samples are created. In the above phrase, the tempo is set to 4/3, which is equivalent to a metronome set at 80 bpm.
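The arithmetic behind this equivalence is simple enough to check with exact fractions. In the sketch below, the function names are chosen for the example and are not part of the Bol Processor.

```python
from fractions import Fraction


def tempo_to_bpm(tempo, metronome_bpm=60):
    """Beats per minute obtained by applying a _tempo() ratio to the metronome."""
    return Fraction(tempo) * metronome_bpm


def beat_duration_ms(tempo, metronome_bpm=60):
    """Duration of one beat, in milliseconds, at that tempo."""
    return Fraction(60_000) / tempo_to_bpm(tempo, metronome_bpm)


print(tempo_to_bpm(Fraction(4, 3)))      # 80
print(beat_duration_ms(Fraction(4, 3)))  # 750
```

With the default metronome at 60 bpm, `_tempo(4/3)` therefore gives 80 bpm, that is, one beat every 750 milliseconds.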

Tables of events

The tables of events provide an easy-to-read representation of the contents of MIDI files.

Two kinds of text files are created.

1) The file with extension "tab" lists MIDI events in four columns. The leftmost column contains the timing of each event in milliseconds. For instance, the following text (from Couperin's Les Ombres errantes):

{_vel(64) {25/6, D5 1/6 {2, G5} F5, {25/6, C5 B4 4/3 B4& &B4 C5& &C5 C5&}, {25/6, G3 1/6 G4 {1/8, Ab4 G4} {7/8, Ab4} _legato(20) A4}}} {_vel(64) {4, {2, &C5 D5& &D5 _legato(20) C5} _legato(0) C5 {1, B4 {2, C5 B4 A4} B4}, F5 Eb5 {1/8, _legato(20) Eb5} {7/8, _legato(0) D5} 1, _legato(0) {1/8, B4 A4} {7/8, B4} C5 F4 G4}} {_tempo(9/10) _vel(64) {4, {G4, C5} Eb4 {1, D4 1} _tempo(26/27) Eb4, Eb4 {3, 1 C4& &C4 B3 1 C4}, C4 C3 G2 C3}}

which can be minimised to:

{_vel(64) {25/6,D5 … {2,G5}F5,{25/6,C5 B4 … B4& &B4 C5& &C5 C5&},{25/6,G3 … G4 {1/8,Ab4 G4}{7/8,Ab4}_legato(20) A4}}}{_vel(64) {4,{2,&C5 D5& &D5 _legato(20) C5}_legato(0) C5 {1,B4 {2,C5 B4 A4}B4},F5 Eb5 {1/8,_legato(20) Eb5}{7/8,_legato(0) D5}1,_legato(0) {1/8,B4 A4}{7/8,B4}C5 F4 G4}}{_tempo(9/10) _vel(64) {4,{G4,C5}Eb4 {1,D4 1}_tempo(26/27) Eb4,Eb4 {3,1 C4& &C4 B3 - C4},C4 C3 G2 C3}}

is converted to:

0 144 74 64

0 144 72 64

0 144 55 64

500 128 72 0

500 144 71 64

1000 128 74 0

1000 128 55 0

1001 128 71 0

1165 144 79 64

1165 144 67 64

1666 144 71 64

etc.

The "tab" table contains only NoteOn/NoteOff events. However, the MIDI file may also contain controls, such as volume, pan, pressure, pitchbend, modulation, pedal on/off, and more in the range 65 to 95, which are performance parameters in the Bol Processor score. A more detailed table format is therefore needed to list them.

2) The text file with extension "tsv" contains a detailed list of (all) MIDI events carried by the MIDI file.

The following example is the opening of Liszt's La Campanella.

{_tempo(97/60) _vel(52) {3,2 {1/2,D#6,D#7} {1/2,D#6,D#7},1/2 {1/2,_switchon(64,1) D#4,D#5} {1/2,D#4,D#5} {1/2,D#4,D#5} 1}} {_tempo(97/60) _vel(52) {3,{1/2,D#6,D#7} 3/2 {1/2,D#6,D#7} {1/2,D#6,D#7},1/2 {1/2,_switchoff(64,1) _switchon(64,1) D#4,D#5} {1/2,D#4,D#5} {1/2,D#4,D#5} -}}

Since the Bol Processor can capture a MIDI stream and create a "tsv" table of events with quantized timings (see Capture MIDI input), training a transformer to convert these tables into polymetric structures could eliminate the need to decode MIDI files and quantize their timings.
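The four-column "tab" listing shown earlier is easy to read back into notes. The following Python sketch parses the rows quoted above and pairs each NoteOn (status 144) with the next NoteOff (status 128) of the same key. It assumes that all events are on MIDI channel 1, as in that excerpt, and the function names are hypothetical.

```python
NOTE_OFF, NOTE_ON = 0x80, 0x90  # status bytes 128 and 144 (MIDI channel 1)


def parse_tab(text):
    """Parse non-empty lines of 'time status key velocity' into int tuples."""
    return [tuple(int(field) for field in line.split())
            for line in text.splitlines() if line.strip()]


def pair_notes(events):
    """Return (closed, pending).

    closed  -- list of (key, start_ms, duration_ms), in NoteOff order
    pending -- dict of keys still sounding, mapped to their start times
    """
    pending, closed = {}, []
    for time_ms, status, key, velocity in events:
        if status == NOTE_ON and velocity > 0:
            pending[key] = time_ms
        elif status == NOTE_OFF or (status == NOTE_ON and velocity == 0):
            if key in pending:
                start = pending.pop(key)
                closed.append((key, start, time_ms - start))
    return closed, pending


TAB = """\
0 144 74 64
0 144 72 64
0 144 55 64
500 128 72 0
500 144 71 64
1000 128 74 0
1000 128 55 0
1001 128 71 0
1165 144 79 64
1165 144 67 64
1666 144 71 64
"""
closed, pending = pair_notes(parse_tab(TAB))
print(closed)   # [(72, 0, 500), (74, 0, 1000), (55, 0, 1000), (71, 500, 501)]
print(pending)  # {79: 1165, 67: 1165, 71: 1666}
```

The three pending notes are those whose NoteOff lies beyond the excerpt. A key struck again before its release simply overwrites the earlier start time, which is a deliberate simplification.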

A collection of datasets

Several collections of datasets can be found in the folder:

https://bolprocessor.org/misc/AI/samples

These can be used for training a transformer of your choice. We recommend creating more sets from more musical works to achieve better training. Run the Bol Processor and browse imported MusicXML scores in the ctests/Imported_MusicXML workspace, or import more works from a dedicated MusicXML server.

The first test of the transformer's ability to "translate" MIDI files (or tables of events) into polymetric notation will be to convert all samples back to polymetric expressions. If this succeeds, the next test will be to convert back the MIDI files of the complete musical works used for the training.

What follows…

Once the correct transformer type and optimum dataset size have been identified, we will work on the following extensions:

- Translate MIDI streams produced by human interpreters for which precise timing is not guaranteed.

- (Optional) Extend the AI recognition to the use of undetermined rests, as these yield a simpler polymetric structure.

- Use a sound-to-MIDI converter to convert a sound input to polymetric notation.

- Let us assume that the musical input consists of fragments separated by silences. Convert these fragments into polymetric structures and then search for regularities in the rhythmic and tonal structures to create variations in the same style. Formal grammars will be employed for this purpose.

🛠 Work in progress! Please contact us to participate.